How Hard Could It Be, Part 4.

(This follows parts 1, 2, and 3.)Okay, where do we stand? Our current implementation uses some basic statistics to try to separate the background of an image from the foreground. It accomplishes this by looking at each pixel individually, and deciding whether or not the pixel has changed enough that it couldn't have happened due to noise in the image. Such an approach looks like this:

Current approach

Really, it isn't bad. But what we'd like is this:

Ideal case

So there's still work to do. In particular, the current attempt introduces a lot of fuzziness: many places are mixed background and foreground pixels, oftentimes alternating back and forth across a large area. It'd be great if we could reduce that fuzz, and produce more uniform areas of background and foreground. That'd look a lot more like the ideal case.

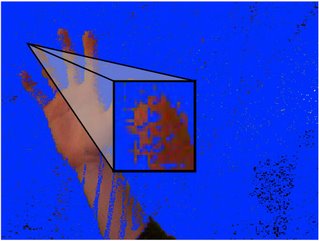

Here's a blown-up piece of the top image:

Close-up of finger

Ideally this would be a sharply defined and completely flesh-colored fingertip, surrounded by solid blue. But how could we create that? Well, maybe it'll happen if we can just reduce the fuzziness and get some sharp edges. We might be able to do that by looking at pixels differently: not as individual cases, but in groups.

The algorithm we'll use, which I completely made up, we'll call run-length detection because it looks at a group (or "run") of fifteen* pixels all at once.

A run of pixels

Here's a strip of fifteen consecutive pixels from the zoomed-in area. What the run-length detection algorithm does is:

Starting at the top left corner of the image, look for a blue (background) pixel followed by a non-blue (foreground) pixel. Since this is potentially where the image switches from background to foreground, we'll try to reduce fuzziness in this area.

Count off the next thirteen pixels, to make a run of fifteen.

Count the number of background pixels in the run.

Count the number of foreground pixels in the run.

If there are ten or more foreground pixels, set all of the pixels in the run to foreground.

Otherwise, set all of the pixels in the run to background.

In the example above, there are nine background pixels and six non-background pixels. The algorithm will set all of these pixels to be background:

Setting all of the pixels as background

We'll apply this algorithm to runs both vertically and horizontally, in order to reduce detection fuzziness in both dimensions. And, we'll also try the run-length detection inverse: instead of looking for a background pixel followed by a foreground one, look for foreground followed by background.

After doing all this, the image looks like this:

Standard deviation + run-length detection

That really worked. There's still some false positive in the lower right, where the black was so noisy that lots of foreground detection was produced, but the hand is so much more clearly defined that this algorithm still helped. It also cleaned up all of the blue showing through the arm and palm.

Things are looking pretty good: our image is getting close to the ideal case. The next thing to do is try to smooth out those sharp edges around the fingers, and try to make a more natural-looking outline around the entire limb.

* The run and threshold numbers were arrived at empirically, just by fiddling around for awhile.

2 Comments:

I am not commenting because I have nothing useful to add, but this series is interesting.

Thanks! I appreciate it.

Next part will be Monday night, btw.

Post a Comment

<< Home